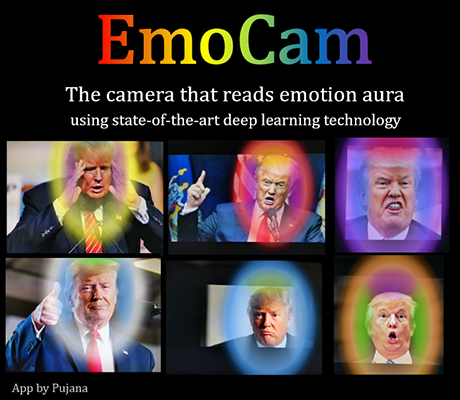

Seeing your Emotion Aura

This tool analyzes your emotions from your facial expressions and visualizes them as an augmented-reality aura. State-of-the-art convolutional neural networks (deep learning models) are used for face detection and facial expression classification. In addition, a fuzzy-logic-inspired algorithm for generating color gradients and a vector-quantization-based stabilizer for noise reduction were developed to produce smooth real-time colorization. In short, the app is built on top of a combination of powerful AI algorithms.

Experience a smart camera that reads your emotion!

Emotion

- Blue: Calm, Sadness

- Green: Happy, Joyful

- Magenta: Disgust

- Orange: Confused, Surprised

- Red: Angry, Upset

- White: Neutral

- Yellow: Anxious, Stressed, Fearful

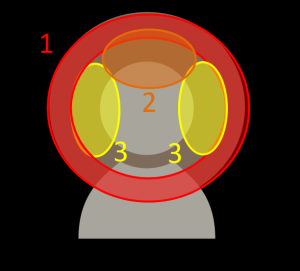

The 3 Sections

- Outer color (1): primary emotion, i.e. the most dominant emotion.

- Color on top (2): secondary emotion.

- Colors on the two sides (3): tertiary emotion.

- The backlight color behind the three sections is derived by mixing all detected emotions.

- The color gradient (associated with the radial size of the aura) is calculated from the confidence value.
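As a rough illustration, the ranking into three sections, the backlight mixing, and the confidence-to-size mapping described above can be sketched in Python. This is not the app's code: it assumes the classifier returns one confidence per class for the seven emotions listed at the end of this page, and the RGB values, the linear radius mapping, and the bounds `r_min`/`r_max` are hypothetical choices for illustration.

```python
from typing import Dict, List, Tuple

RGB = Tuple[int, int, int]

# Hypothetical RGB values for the aura colors listed above (illustrative only).
AURA_COLORS: Dict[str, RGB] = {
    "sad": (0, 0, 255),          # Blue
    "happy": (0, 255, 0),        # Green
    "disgust": (255, 0, 255),    # Magenta
    "surprise": (255, 165, 0),   # Orange
    "anger": (255, 0, 0),        # Red
    "neutral": (255, 255, 255),  # White
    "fear": (255, 255, 0),       # Yellow
}


def rank_sections(probs: Dict[str, float]) -> List[str]:
    """Return [primary, secondary, tertiary] emotions by descending confidence."""
    return sorted(probs, key=probs.get, reverse=True)[:3]


def backlight_color(probs: Dict[str, float]) -> RGB:
    """Mix the colors of all emotions, weighting each by its share of confidence."""
    total = sum(probs.values())
    if total <= 0:
        return AURA_COLORS["neutral"]  # nothing detected: fall back to white
    mixed = [0.0, 0.0, 0.0]
    for emotion, p in probs.items():
        for ch in range(3):
            mixed[ch] += AURA_COLORS[emotion][ch] * p / total
    return tuple(round(v) for v in mixed)


def aura_radius(confidence: float, r_min: float = 40.0, r_max: float = 120.0) -> float:
    """Map a confidence in [0, 1] linearly to a radius (hypothetical bounds)."""
    confidence = min(max(confidence, 0.0), 1.0)
    return r_min + (r_max - r_min) * confidence


if __name__ == "__main__":
    probs = {"happy": 0.6, "surprise": 0.25, "neutral": 0.1, "sad": 0.05,
             "anger": 0.0, "disgust": 0.0, "fear": 0.0}
    print(rank_sections(probs))  # ['happy', 'surprise', 'neutral']
    print(backlight_color(probs))
    print(aura_radius(probs["happy"]))
```

A weighted average keeps the backlight dominated by the strongest emotions while still reflecting weaker ones; a non-linear radius curve would work just as well in this sketch.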

However, changes in the aura are kept small to reduce the impact of noise.

- The Stabilizer uses k-means over a sliding window to suppress noise.

- While the Stabilizer is on, colors are also blended based on the ratio of their confidences.
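The page does not describe the Stabilizer's internals, so the following is only a minimal sketch under stated assumptions: each frame yields one RGB color, the window length (8) and `k` (2) are hypothetical, and the stabilized output is the centroid of the largest cluster in the window, so a single noisy frame is ignored while a sustained change wins out. This is one reading of "k-means over sliding windows" (snapping to a centroid is the vector-quantization step); `blend_by_confidence` is likewise one possible reading of blending by confidence ratio.

```python
from collections import deque
from typing import Deque, List, Sequence, Tuple

Color = Tuple[float, float, float]


def _dist2(a: Color, b: Color) -> float:
    """Squared Euclidean distance between two RGB colors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: Sequence[Color], k: int, iters: int = 10) -> Tuple[List[Color], List[int]]:
    """Plain Lloyd's k-means with farthest-first initialization."""
    centroids: List[Color] = [points[-1]]  # start from the newest sample
    while len(centroids) < k:
        # Add the point farthest from all centroids chosen so far.
        centroids.append(max(points, key=lambda p: min(_dist2(p, c) for c in centroids)))
    labels = [0] * len(points)
    for _ in range(iters):
        # Assign each color to its nearest centroid.
        labels = [min(range(k), key=lambda j: _dist2(p, centroids[j])) for p in points]
        # Move each centroid to the mean of its members (empty clusters stay put).
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                centroids[j] = tuple(sum(ch) / len(members) for ch in zip(*members))
    return centroids, labels


class ColorStabilizer:
    """Hypothetical sliding-window stabilizer built on k-means."""

    def __init__(self, window: int = 8, k: int = 2):
        self.history: Deque[Color] = deque(maxlen=window)  # oldest frames drop out
        self.k = k

    def update(self, color: Color) -> Color:
        """Add this frame's color and return the centroid of the largest cluster."""
        self.history.append(color)
        points = list(self.history)
        k = min(self.k, len(points))
        centroids, labels = kmeans(points, k)
        largest = max(range(k), key=labels.count)
        return centroids[largest]


def blend_by_confidence(c1: Color, p1: float, c2: Color, p2: float) -> Color:
    """Blend two colors, weighting each by its share of the combined confidence."""
    total = p1 + p2
    if total <= 0:
        return c1
    w = p1 / total
    return tuple(w * a + (1 - w) * b for a, b in zip(c1, c2))


if __name__ == "__main__":
    stab = ColorStabilizer(window=8, k=2)
    frames = [(0, 255, 0)] * 5 + [(255, 0, 0)] + [(0, 255, 0)] * 2  # one noisy red frame
    for f in frames:
        print(stab.update(f))  # stays green throughout
    print(blend_by_confidence((0, 255, 0), 0.75, (255, 0, 0), 0.25))
```

Taking the largest cluster rather than the plain window mean means a one-frame spike does not tint the aura at all, at the cost of a few frames of lag when the emotion genuinely changes.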


Known Errors/Issues (Being Fixed)

Flipped Light Position

- We have received reports that the light position is misplaced on some mobile devices.

- As a temporary workaround, please use the “Flip” buttons in the settings panel.

- We are sorry for the inconvenience. Camera handling (default orientation and flip) differs across Android versions and needs separate code for each, and we have not been able to cover them all yet.

Duplicated Lights

- On some devices, the light becomes more intense after switching between the front and back cameras.

- We also found that this causes detection accuracy to drop.

- We will fix this as soon as possible. Thank you for your patience, and sorry for the inconvenience.

Note

- “Open Folder” and “Resolution Setting” may not work on some versions of Android.

- Photos are saved to “DCIM/EmoCam”.

- If the orientation is incorrect, try rotating your phone (portrait >> landscape >> portrait); this may fix it.

** The exact cause of this bug is unknown; it appears to depend on the Android version.

- This is a beta/pilot version, so sorry for any bugs you may encounter. If you find a bug or error, a report to pujana[dot]p[at]gmail.com would be much appreciated.

- Performance is still being improved, and it also depends on the device's CPU.

- The models were trained on face data of Westerners due to the limited datasets available, so accuracy may be lower for Asian users. I’m still collecting data to address this.

- High-resolution photos will be available.

- The Stabilizer is automatically turned off during multi-face detection.

Open for Facial Dataset Donation: If you have a facial expression dataset (images) and are willing to donate it to improve our system, please contact us. Donors will be credited on this page.

To be combined with our existing dataset, new data must meet the following requirements:

- Face data for an existing emotion: at least 10K face images for that emotion.

- Face data for a new emotion: at least 100K face images for that emotion.

- Examples of public datasets: https://en.wikipedia.org/wiki/Facial_expression_databases

- We currently can’t afford commercial datasets, so please don’t contact us for sales purposes.

Pujana[dot]P[at]gmail

- Happy

- Surprise

- Disgust

- Anger

- Neutral

- Fear

- Sad